Hadoop Stack How Hadoop Stack Works? List of Services

For the first filter, set the property to 'Username' and fill in 'admin' as the value. For the second filter, set the property to 'Operation' and fill in 'QUERY' as the value. Then click 'Apply'. As you click on the individual results, you can see the exact queries that were executed and all related details.

Hadoop Hadoop Tutorial System Requirements for Hadoop Installation

This tutorial takes about 30 minutes to complete and is divided into the following four tasks: Task 1: Log in to the virtual machine. Task 2: Create The MapReduce job. Task 3: Import the input data in Hadoop Distributed File System (HDFS) and Run the MapReduce job. Task 4: Analyze the MapReduce job's output on HDFS.

What is Hadoop and How does Hadoop work? Programming Cube

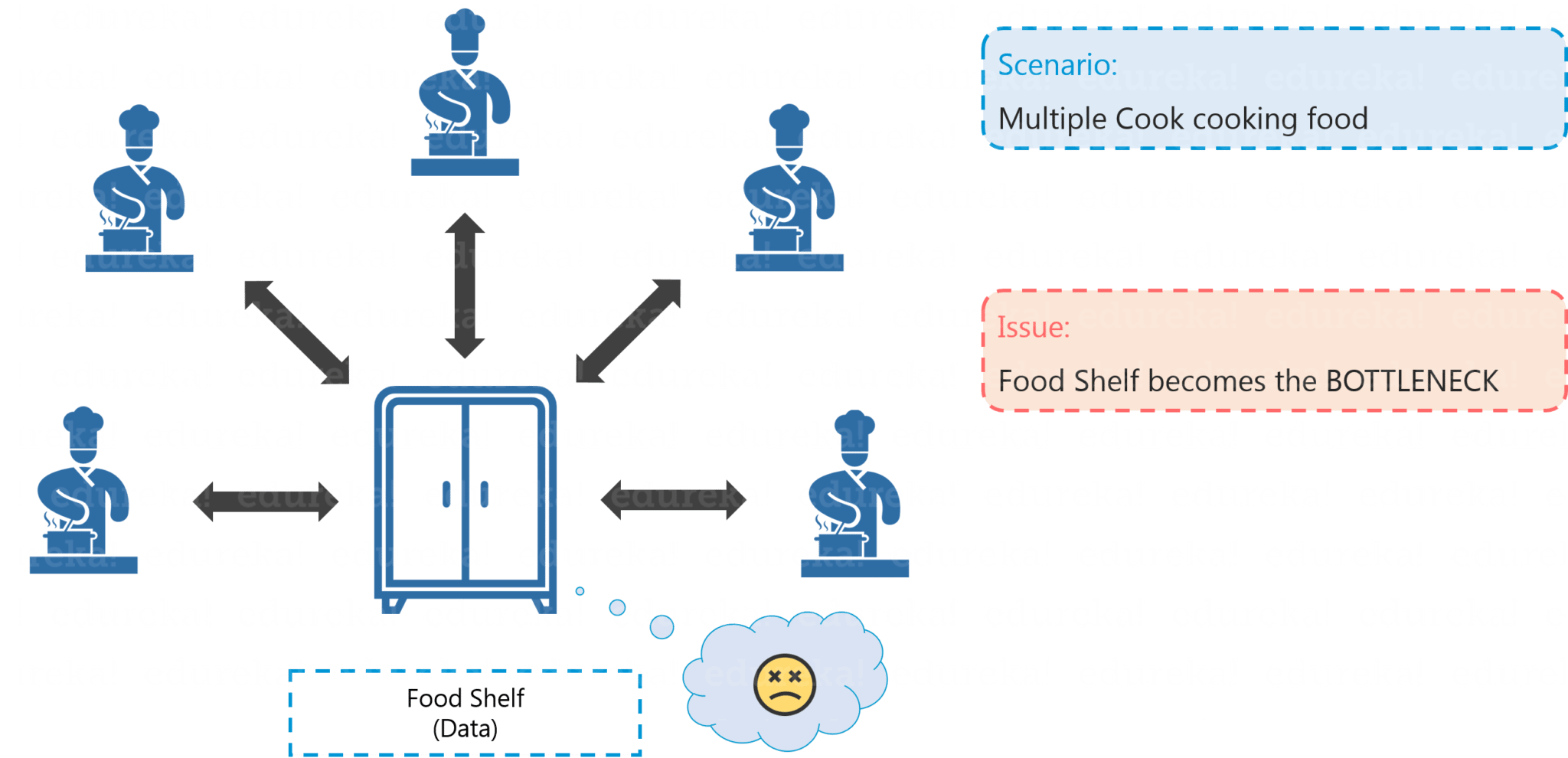

Hadoop is one of the top platforms for business data processing and analysis, and here are the significant benefits of learning Hadoop tutorial for a bright career ahead: Scalable : Businesses can process and get actionable insights from petabytes of data. Flexible : To get access to multiple data sources and data types.

Hadoop Tutorial For Beginners Hadoop Full Course In 10 Hours Big

The Hortonworks Data Platform (HDP) is a security-rich, enterprise-ready open-source Hadoop distribution based on a centralized architecture (YARN). Hortonworks Sandbox is a single-node cluster and can be run as a Docker container installed on a virtual machine. HDP is a complete system to handle the processing and storage of big data. It is an.

Hadoop Tutorial For Beginners Hadoop Ecosystem Core Components In 1

Apache Hadoop Ecosystem. Hadoop is an ecosystem of open source components that fundamentally changes the way enterprises store, process, and analyze data. Unlike traditional systems, Hadoop enables multiple types of analytic workloads to run on the same data, at the same time, at massive scale on industry-standard hardware.

Top 10 Best Laptops For Hadoop for 2024 Laptopified

Hortonworks Data Platform (HDP) is an open source distribution powered by Apache Hadoop. HDP provides you with the actual Apache-released versions of the components with all the latest enhancements to make the components interoperable in your production environment, and an installer that deploys the complete Apache Hadoop stack to your entire.

Hadoop Tutorial Getting Started With Big Data And Hadoop Edureka

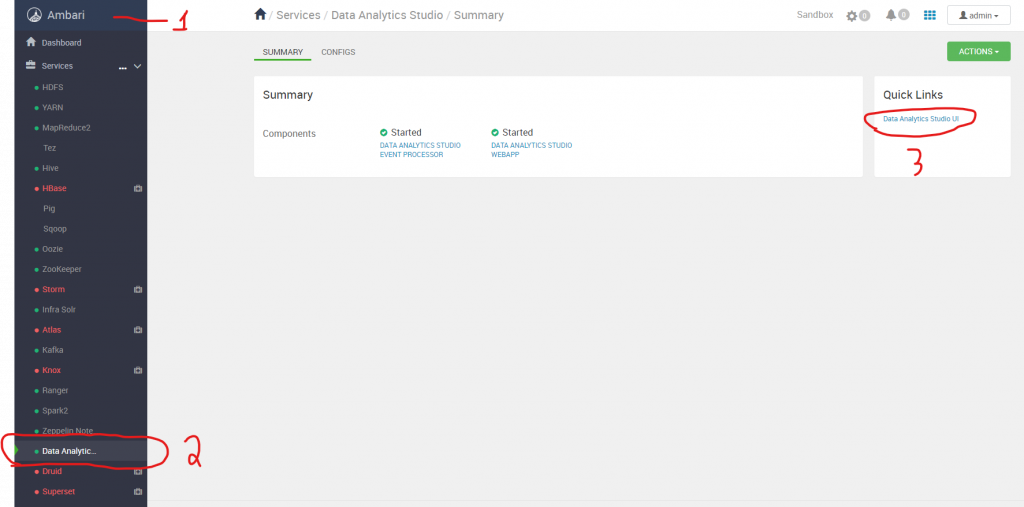

In this course, Getting Started with Hortonworks Data Platform, you will learn how to build a big data cluster using the hadoop data platform. First, you will explore how to navigate your HDP cluster from the Command line. Next, you will discover how to use Ambari to automate your Hadoop cluster. Finally, you will learn how to set up rack.

Hadoop Tutorial Getting Started With Big Data And Hadoop Edureka

We also provide tutorials to help you get a jumpstart on how to use HDP to implement an Open Enterprise Hadoop in your organization. Every component is updated, including some of the key technologies we added in HDP 2.3. This guide walks you through using the Azure Gallery to quickly deploy Hortonworks Sandbox on Microsoft Azure. Prerequisite:

Update "Hadoop Tutorial Getting Started with HDP" Tutorial · Issue

Hadoop Tutorial - Getting Started with HDP This tutorial will help you get started with Hadoop and HDP. We will use an Internet of Things (IoT) use case to build your first HDP application. Zoomdata Faster Pig with Tez Introduction In this tutorial, you will explore the difference between running pig with execution engine of MapReduce and Tez.

Getting Started With HDP Sandbox PDF Apache Hadoop Map Reduce

This tutorial aims to achieve a similar purpose by getting practitioners started with Hadoop and HDP. We will use an Internet of Things (IoT) use case to build your first HDP application. This tutorial describes how to refine data for a Trucking IoT Data Discovery (aka IoT Discovery) use case using the Hortonworks Data Platform.

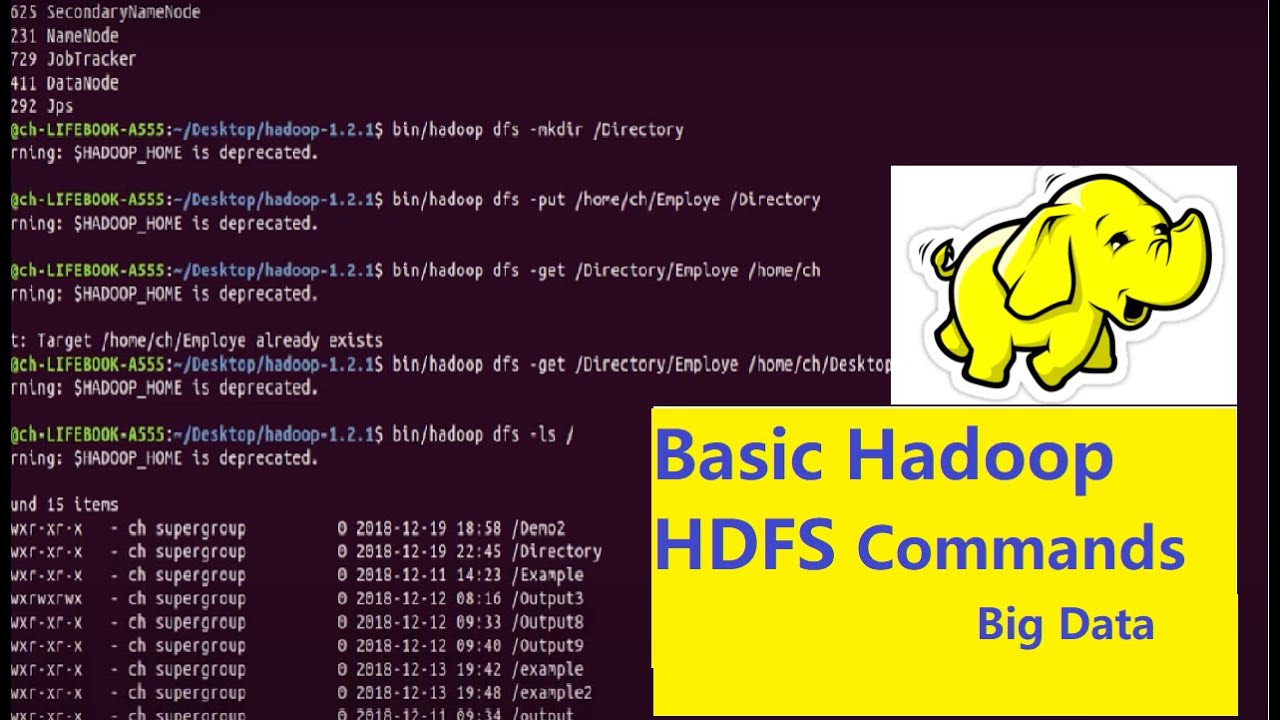

Basic HDFS Commands (Hadoop) For Beginners of Big Data Hadoop Learner

Hortonworks Sandbox provides you with a personal learning environment that includes hadoop tutorials, use cases, demos and multiple learning media. Free down.

Hadoop Tutorial Getting Started With Big Data And Hadoop Edureka

Securely store, process, and analyze all your structured and unstructured data at rest. Hortonworks Data Platform (HDP) is an open source framework for distributed storage and processing of large, multi-source data sets. HDP modernizes your IT infrastructure and keeps your data secure—in the cloud or on-premises—while helping you drive new.

The Definitive Guide To Free Hadoop Tutorial For Beginners

Install and work with a real Hadoop installation right on your desktop with Hortonworks (now part of Cloudera) and the Ambari UI. Manage big data on a cluster with HDFS and MapReduce. Write programs to analyze data on Hadoop with Pig and Spark. Store and query your data with Sqoop, Hive, MySQL, HBase, Cassandra, MongoDB, Drill, Phoenix, and Presto.

[PDF] Get started with Hadoop free tutorial for Beginners

He named the project Hadoop after his son's yellow toy elephant; it was also a unique word that was easy to pronounce. He wanted to create Hadoop in a way that would work well on thousands of nodes, and so he started working on Hadoop with GFS and MapReduce. In 2007, Yahoo successfully tested Hadoop on a 1,000-node cluster and began using it.

Hadoop Tutorial Getting Started With Hdp

While Hive and YARN provide a processing backbone for data analysts familiar with SQL to use Hadoop, HUE provides my interface of choice for data analysts to quickly get connected with big data and Hadoop's powerful tools. With HDP, HUE's features and ease of use are something I always miss, so I decided to add HUE 3.7.1 to my HDP clusters.

Hadoop Tutorial Intro to HDFS YouTube

In this task, we will place the sample.log file data into HDFS where MapReduce will read it and run the job. STEP 1: Create an input directory in HDFS: # hadoop fs -mkdir tutorial1/input/. STEP 2: Verify that the input directory has been created in the Hadoop file system: # hadoop fs -ls /user/root/tutorial1/.